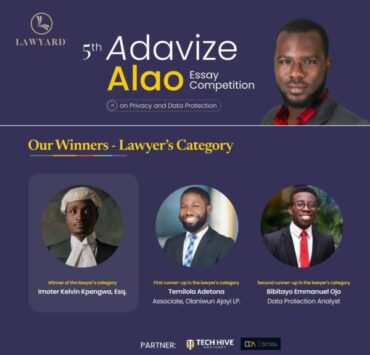

Assessing Nigeria’s Data Protection Readiness in the Age of Large Language Models (LLMs). By Bibitayo Emmanuel Ojo, Second Runner-up, Lawyers Category of the 5th Edition of the Adavize Alao Essay Competition


Introduction

In recent years, the proliferation of Large Language Models (LLMs) has revolutionised the field of artificial intelligence, enabling machines to generate human-like text at an unprecedented scale. While this technological advancement holds great promise for various sectors, it raises critical data privacy and protection concerns. Nigeria is not exempt from this rapid rise of generative AI and machine learning. The question, however, is how prepared Nigeria is to protect data subjects and ensure the ethical use of LLMs. This essay assesses Nigeria’s preparedness for this evolving technology and explores the current initiatives, challenges, and potential strategies required to safeguard sensitive information in the age of LLMs.

Contextualizing Nigeria’s Digital Transformation

The Information and Communications Technology (ICT) sector drives the rapid digitisation of various sectors in Nigeria. It plays a vital role in the country’s economic recovery, contributing 9.88% to GDP in Q4 of 2021. Nigeria’s ICT market is the largest in Africa and is projected to grow significantly over the next few years as technological advancement sweeps across every sector. The government recognises the importance of the sector and encourages partnerships with foreign investors. Furthermore, the Federal Ministry of Communications and Digital Economy has launched a national policy to diversify the economy. The ICT sector contributes about 17.47% to the country’s GDP. It is reshaping the economic landscape of Nigeria and Africa through digital transformation.

Against this backdrop, Nigeria relies heavily on data-driven technologies for economic growth and development. Data and information have become significant drivers of economic growth and sustainable development in the 21st century. The lack of data management systems in many African countries, including Nigeria, has contributed to negative consequences such as economic hardship and poverty. Recognising the importance of data-driven policies, the Nigerian government is partnering with operators to protect consumer data and ensure a secure economic environment. The National Information Technology Development Agency (NITDA) is actively working to promote a data-driven economy and national database development in Nigeria.

Overview of existing data protection laws on LLMs in Nigeria

As of today, no specific law or regulation in Nigeria addresses large language models or artificial intelligence systems. However, Nigeria enacted the Nigeria Data Protection Act in June 2023, which aims to protect personal data and promote the use of data-driven technologies for economic growth and development. While this Act focuses on data protection and privacy, it does not explicitly address the regulation of LLMs or AI systems. Regulating AI and large language models is a global concern, and various countries are proposing laws to address their risks and impacts. Initiatives such as the EU AI Act, the UK’s proposals for AI regulation, and Canada’s proposed Artificial Intelligence and Data Act (AIDA) show how proactively other jurisdictions are moving to regulate this space. These instruments aim to govern the development and use of AI systems, including provisions for privacy and cybersecurity.

By contrast, the Nigeria Data Protection Act (NDPA) and the Nigeria Data Protection Regulation (NDPR) have not given attention to the potential effects of generative AI on the rights and freedoms of data subjects. Key clauses on algorithmic accountability and fairness are absent from Nigeria’s data privacy framework. Without such provisions in the data protection laws and regulations, holding those who deploy algorithms accountable will remain difficult.

Likewise, there is no obligation on a significant data controller to perform an Algorithm Impact Assessment where training LLMs poses a risk of a data privacy breach. A requirement to assess algorithm designs could similarly have averted numerous privacy breaches in LLMs. Unfortunately, this provision was also overlooked in the pertinent legal frameworks.

Challenges Posed by Large Language Models

LLMs can affect personal data negatively in Nigeria. These models, such as ChatGPT and Google’s Bard, can process and analyse massive amounts of personal data. The risks associated with LLMs include data privacy concerns, model bias and discrimination, security vulnerabilities, and data misuse.

If not properly regulated and managed, LLMs can lead to unauthorised access to and misuse of personal data, compromising the privacy of individuals. Data subjects also risk having their personal information used in a biased or discriminatory manner, as large language models can inadvertently amplify existing biases present in the data they are trained on.

Moreover, the lack of transparency and accountability in LLMs can make it difficult for data subjects to understand how their data is being processed and used, compromising their rights to privacy and control over their data.

While Nigeria does not have specific laws or regulations addressing LLMs, the Nigeria Data Protection Act 2023 provides guidelines and regulations for collecting, processing, storing, and sharing personal data, aiming to protect individuals’ rights regarding their data.

Ensuring consent and transparency in data collection and processing is another major challenge, especially when training LLMs. To achieve both in Nigeria, a data controller/processor should adhere to the Nigeria Data Protection Act and its principles. The Act requires that consent be freely given, specific, informed, and unambiguous, similar to the consent requirements under the Nigeria Data Protection Regulation (NDPR). A data controller/processor must inform individuals about the purpose and scope of data collection and the intended use of LLMs in processing their data, and must obtain explicit consent from data subjects before collecting and using their personal data.

However, the procedure for obtaining consent can be vague, as users may not always be fully aware of how their data is used. For instance, most users do not know that their interactions with ChatGPT are being stored and analysed. And what about the data collected to train the LLM?

The potential for LLMs to amplify existing biases in data is a daunting risk to data protection in the age of AI. Like many machine learning systems, LLMs learn from vast amounts of historical data, which often contain inherent societal prejudices and biases. These biases can be perpetuated and even magnified when LLMs generate text or make decisions. For instance, if the training data contains biased language or stereotypes, LLMs may inadvertently produce content reinforcing these biases. This poses a significant threat to data protection, as individuals may face discriminatory outcomes in automated decision-making processes, and their privacy rights may be compromised by the perpetuation of prejudiced narratives.

Several strategies have been developed to mitigate this risk in AI systems. First, training data should be gathered from diverse and representative sources so that it more accurately reflects the diversity of society. In addition, biases in the outputs of LLMs can be found and reduced using robust bias detection and correction procedures. To promote responsible use and reduce bias, ethical frameworks and principles may also be incorporated into AI development. Finally, collaboration between the government, business, and other stakeholders is essential to ensure that LLMs maintain fairness and data protection, because it enables the establishment of industry standards, open practices, and independent audits, which improve trust and accountability in the use of LLMs.

Current Government and Industry Initiatives and Practices

Although no laws currently regulate LLMs in Nigeria, the National Information Technology Development Agency (NITDA) is leading some work in this area together with the National Centre for Artificial Intelligence and Robotics (NCAIR) and other stakeholders. This collaboration has produced a draft National Policy on Artificial Intelligence. In furtherance of this effort, NITDA has invited experts to collaborate in developing the National AI Strategy. In the same vein, the UK government has agreed to partner with Nigeria on the security and safety of AI.

Best practices in handling sensitive information in the era of LLMs

LLMs are trained on data, including personal data, and users often include personal information in their prompts. It is therefore imperative to ensure the privacy and security of data not only when training LLMs but also when using generative AI. Personal data can be secured in LLMs by adopting the following best practices:

i. Data Minimisation

The principle of data minimisation requires that the personal data collected by the data controller/processor be limited to the data that is relevant and necessary for the stated purpose of processing. Because training LLMs requires large volumes of data, full alignment with this principle is virtually impracticable, so end users of generative AI must help mitigate the risk attached to training LLMs on extensive data. A sound practice is to limit the personal data included in any prompt.

ii. Anonymisation

Where models must learn from sensitive data, anonymisation is a best practice. Anonymisation is the modification or removal of personally identifiable information that could lead to the identification of a data subject, while maintaining the usability of the data. Although anonymisation is a sound suggestion, it does not address concerns about how the data was collected or the purpose for which it is used.
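As a rough illustration of the two practices above, the following Python sketch masks some obvious identifiers before text is placed in a prompt or a training set. The `PATTERNS` table and the `redact` function are hypothetical names introduced here, and the two patterns (email addresses and Nigerian-style mobile numbers) are assumptions chosen for demonstration. Simple pattern matching of this kind will miss many identifiers, such as names and addresses, so it should be read as a sketch of the idea rather than as anonymisation in the legal sense.

```python
import re

# Hypothetical patterns for illustration only. Real personal data takes many
# more forms, and regular expressions alone will not catch all of it.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # Assumed shape of a Nigerian mobile number: 0 or +234, then 10 digits
    # beginning with 7, 8 or 9 followed by 0 or 1.
    "PHONE": re.compile(r"(?:\+234|0)[789][01]\d{8}"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Draft a reply to ada@example.com and copy 08031234567."
    print(redact(prompt))
    # prints: Draft a reply to [EMAIL] and copy [PHONE].
```

The placeholders keep the sentence usable for the model while removing the identifier itself, which mirrors the balance between usability and privacy described above.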

iii. Regular Audit/Penetration Testing

Just as the security infrastructure of a data storage system should be tested, LLM logs should be monitored regularly to identify vulnerabilities and mitigate any risks found.
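One way such log monitoring might look in practice is sketched below in Python. It assumes a hypothetical log in which each line records one prompt or response as plain text, and it checks only for apparent email addresses; the `audit_log` function and the `EMAIL` pattern are illustrative names, not part of any particular LLM product.

```python
import re

# Illustrative pattern: flags text that looks like an email address.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def audit_log(lines):
    """Return (line_number, match_count) for every line that appears to
    contain at least one email address. Line numbers start at 1."""
    findings = []
    for number, line in enumerate(lines, start=1):
        hits = EMAIL.findall(line)
        if hits:
            findings.append((number, len(hits)))
    return findings


if __name__ == "__main__":
    sample = [
        "prompt: summarise this contract",
        "prompt: write to ada@example.com and bo@example.org",
        "response: done",
    ]
    for number, count in audit_log(sample):
        print(f"line {number}: {count} possible email address(es)")
```

A report of this kind only points an auditor to lines worth reviewing; deciding whether a flagged entry is an actual breach remains a human judgment.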

iv. Regular Updates

For optimum performance, and to keep an AI application safe from vulnerabilities, constant updates are needed. Much of this responsibility lies with the end user of the AI tool, who should install each new update as soon as it is released. Doing so addresses vulnerabilities that may have been identified in the existing application.

v. Ethical Consideration

Training LLMs has raised ethical concerns about data processing and the risk of data misuse. To address these concerns, transparency, fairness, and accountability must be adopted to ensure that data processing in LLMs is conducted responsibly.

Recommendations for Strengthening Data Protection Readiness

First, Nigeria’s existing data protection law needs improvement. This is a crucial step towards a comprehensive data protection framework in the country. New provisions should align with international data protection frameworks and address the unique challenges LLMs pose. An updated law would introduce regulations tailored explicitly to LLMs, including guidelines for their use and limitations, and would establish mechanisms for oversight and enforcement to ensure compliance. By addressing the impact of LLMs on personal data, Nigeria would demonstrate that it is proactive in protecting the privacy rights of Nigerians.

Collaboration between government, industry, and civil society is crucial to ensure comprehensive data protection in LLMs. Establishing common standards that govern the use and handling of personal data in LLMs should be a priority. This could be achieved through joint initiatives focusing on researching, developing, and implementing robust data protection measures tailored to LLMs. It is discernible that the current administration is working towards guidelines and regulations that address the unique challenges posed by LLMs, ensure the responsible use of personal data, and enhance privacy rights; however, issues bordering on data privacy still need to be addressed.

As the country develops its AI policy and its data protection framework, it should consider the unique challenges posed by LLMs and establish tailored regulations. These regulations should cover the responsible use of LLMs and its limits, mechanisms for oversight and enforcement, and clear guidance on their use in various sectors. Collaboration between government, industry, and civil society can also establish common standards and joint initiatives for research, development, and implementation of robust data protection measures for LLMs.

Conclusion

As Nigeria continues its digital transformation journey, addressing the evolving challenges posed by LLMs is imperative. By developing holistic legal frameworks, enhancing partnerships, and investing in research, Nigeria can strengthen its data protection readiness and ensure that the benefits of AI and LLMs are harnessed in a manner that respects individual privacy and societal well-being. The collective effort of government, industry, and civil society is pivotal in shaping a secure and prosperous digital future for Nigeria.

Lawyard is a legal media and services platform that provides enlightenment and access to legal services to members of the public (individuals and businesses) while also availing lawyers of needed information on new trends and resources in various areas of practice.